SEE-THROUGH-SOUND

Tomás Henriques, Buffalo State College NY, USA and CESEM (FCSH), Portugal

Sofia Cavaco, CITI, Faculdade de Ciências e Tecnologia, Universidade Nova de Lisboa, Portugal

Michele Mengucci, Faculdade de Ciências e Tecnologia, Universidade Nova de Lisboa, Portugal and Lab-IO, Portugal

Collaborators

Nuno Correia, CITI, Faculdade de Ciências e Tecnologia, Universidade Nova de Lisboa, Portugal

Francisco Medeiros, Lab-IO, Portugal

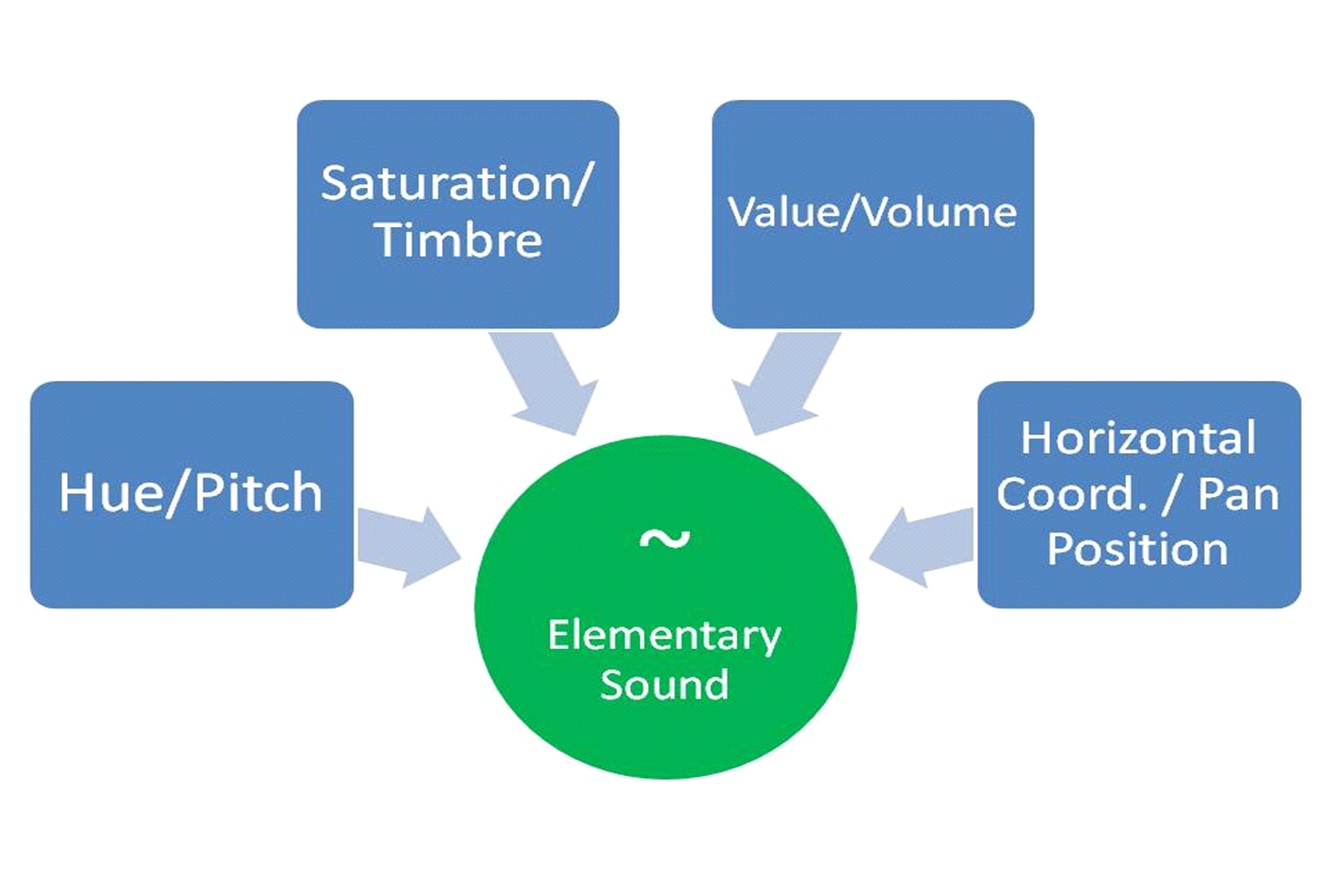

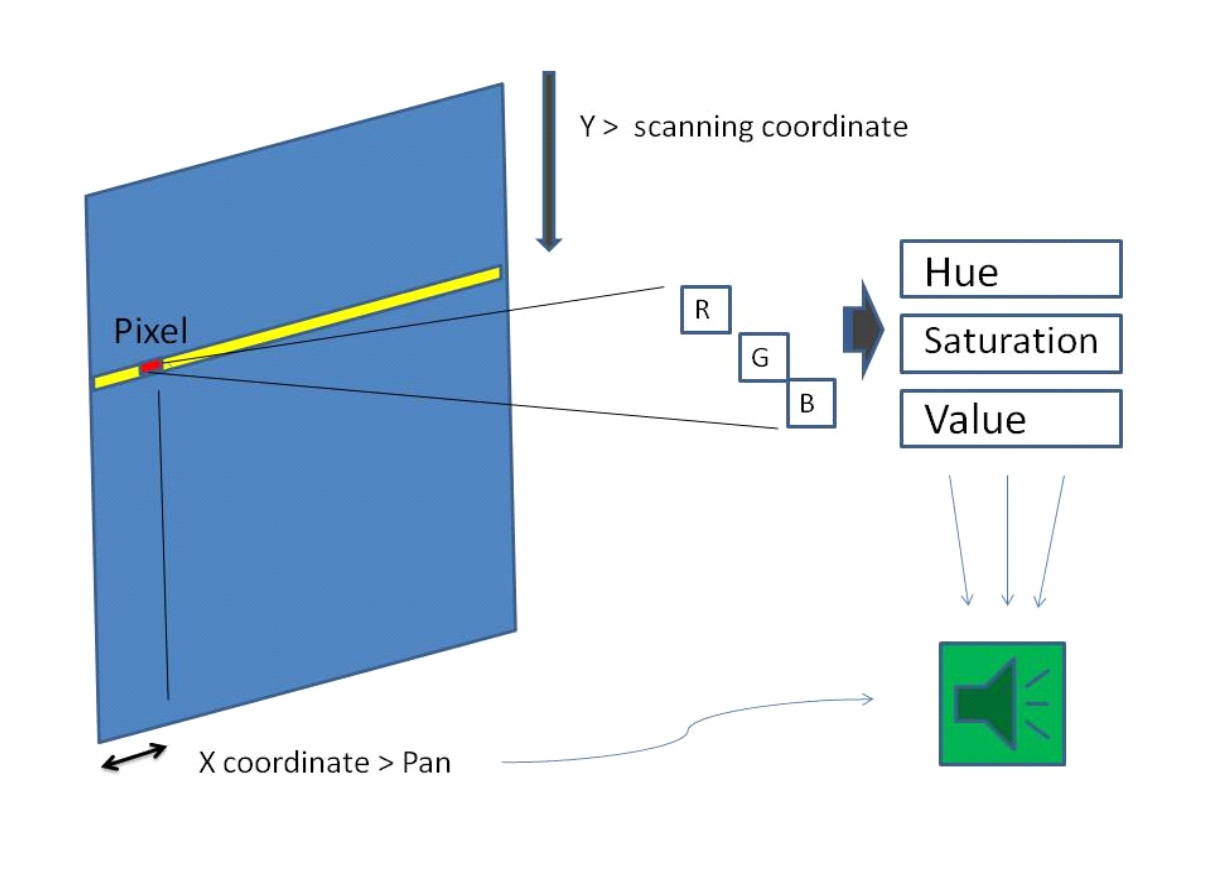

Objective

Create a device that converts color information from live video and still images into sound, mapping color attributes to sound parameters such as pitch, timbre, volume and sound location. The tool aims specifically at helping the visually impaired navigate through and assess their surrounding environment. It can also help anyone sonically decode visual information without directly observing or having access to its source.

Approach

A digital image is a composition of discrete elementary units of color/light, its pixels. Our research deals with the conversion of a digital image into its sonic print, where each pixel and its properties have an associated sound. Therefore, when a single image or video frame is "played", the result is a composition of elementary sounds derived directly from the pixels' values. These sound events convey what the camera captures, giving information about the colors that surround the user, the amount of darkness/light, and the location of light sources.

The prototype can process either a still image or a live video feed, weaving a tapestry of sonic particles of varying complexity. The flow of the synthesized audio depends on the camera's scanning rate, which is user controlled. A high rate works best when information about the environment is needed quickly, as when moving in crowded urban areas; a low rate is better suited when details of a scene are needed.

Mapping Color to Sound

To convert an image into sound we use the Hue, Saturation and Value (HSV) color model. Hue contains information about the "pure color" of the pixel, Saturation measures the deviation of the color from gray, and Value conveys information about the color's brightness. The HSV attributes of the pixels are mapped to pitch, timbre and volume, respectively. A fourth parameter, the pixel's abscissa, was added to locate the sound within the horizontal plane (fig. 1).
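The mapping just described can be illustrated with a minimal Python sketch. It is not the prototype's implementation: the function name `pixel_to_sound`, the base frequency, the default octave span, the exponential hue-to-frequency curve and the 0-to-1 scales for timbre, volume and stereo position are all assumptions made for illustration.

```python
import colorsys


def pixel_to_sound(r, g, b, x, width, base_freq=220.0, octaves=2):
    """Map one RGB pixel (channels 0-255) at column x to sound parameters.

    All names and default values here are hypothetical.
    """
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)

    # Hue (0..1) -> pitch: assumed exponential sweep across `octaves` octaves.
    frequency = base_freq * 2.0 ** (h * octaves)

    # Saturation -> timbre: kept as a 0..1 control value (assumption);
    # a synthesizer could use it to set harmonic richness, for example.
    timbre = s

    # Value -> volume: brighter pixels are louder, black is silent.
    volume = v

    # Abscissa -> horizontal position: -1.0 is far left, +1.0 is far right.
    pan = 2.0 * x / (width - 1) - 1.0 if width > 1 else 0.0

    return {"frequency": frequency, "timbre": timbre, "volume": volume, "pan": pan}
```

Under these assumptions, a fully saturated bright red pixel at the left edge yields the base frequency at full volume, panned hard left, while a black pixel yields a volume of zero.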
The pitch output of an HSV value can be assigned to user-defined pitch ranges, from one to four octaves and with different frequency resolutions. The prototype scans the images from top to bottom, interpolating the HSV values over 12 segments (columns) along each row, and generates a sound for each of the segments it plays (fig. 2). Images are played as they are being scanned.
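The row-by-row scan can be sketched as follows. The text does not specify how the interpolation is computed, so this sketch substitutes a simple stand-in: it averages the RGB values inside each of the 12 segments and converts the average to HSV. The function names `row_to_segments` and `scan_image`, and the representation of an image as a list of rows of `(r, g, b)` tuples, are hypothetical.

```python
import colorsys


def row_to_segments(row, n_segments=12):
    """Reduce one image row to n_segments (h, s, v) triples.

    `row` is a non-empty list of (r, g, b) tuples with channels in 0-255.
    Averaging in RGB is an assumed stand-in for the prototype's interpolation.
    """
    width = len(row)
    segments = []
    for i in range(n_segments):
        start = i * width // n_segments
        # Guarantee at least one pixel per segment for narrow rows.
        end = max(start + 1, (i + 1) * width // n_segments)
        chunk = row[start:end]
        r = sum(p[0] for p in chunk) / len(chunk)
        g = sum(p[1] for p in chunk) / len(chunk)
        b = sum(p[2] for p in chunk) / len(chunk)
        segments.append(colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0))
    return segments


def scan_image(image, n_segments=12):
    """Yield the segment list of each row, top to bottom."""
    for row in image:
        yield row_to_segments(row, n_segments)
```

Because `scan_image` is a generator, each row's segments become available as soon as that row has been read, which mirrors the idea of playing an image while it is being scanned. Averaging in RGB also avoids averaging hue directly, which is an angle and wraps around at red.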

Fig. 1 - Pixels' HSV to sound mapping

Fig. 2 - Interpolation of the HSV values

The prototype can be downloaded here.

S. Cavaco, J.T. Henriques, M. Mengucci, N. Correia, F. Medeiros, Color sonification for the visually impaired, in Procedia Technology, M. M. Cruz-Cunha, J. Varajão, H. Krcmar and R. Martinho (Eds.), Elsevier, volume 9, pages 1048-1057, 2013.

S. Cavaco, M. Mengucci, J.T. Henriques, N. Correia, F. Medeiros, From Pixels to Pitches: Unveiling the World of Color for the Blind, in Proceedings of the IEEE International Conference on Serious Games and Applications for Health (SeGAH), 2013. (Best Paper Award.)

M. Mengucci, J.T. Henriques, S. Cavaco, N. Correia, F. Medeiros, From Color to Sound: Assessing the Surrounding Environment, in Proceedings of the International Conference on Digital Arts (ARTECH), pages 345-348, 2012.

S. Cavaco, J.T. Henriques, M. Mengucci, N. Correia, F. Medeiros, SonarX - Ver Através do Som, in Ponto e Som, Biblioteca Nacional de Portugal, n.157, pages 11-17, 2013. Also available in braille.

T. Henriques, M. Mengucci, F. Medeiros, S. Cavaco, N. Correia, See-Through-Sound - Bridging Sensory Worlds, SUNY at Buffalo State Faculty Research and Creativity Forum, October 2012.