We are currently working on applications for health, such as serious games and toolsets that explore HCI techniques and biofeedback for speech therapy. Our goal is to leverage speech and facial expression recognition, combined with gaming, to improve the effectiveness of speech therapy processes designed by healthcare specialists. This work is being developed in collaboration with researchers from Carnegie Mellon University, INESC-ID, Escola Superior de Saúde de Alcoitão and VoiceInteraction. More details are available on the BioVisualSpeech project page.

Education

We are also currently working on serious games and other educational applications for blind students, such as educational computer games, orientation and mobility games that use spatialized sound, and a molecular editor with spatialized sound. Some of the results appear in Ferreira and Cavaco (FIE 2014) and Simões and Cavaco (ACE 2014); the latter won the Bronze poster award.

We have also been working on the synthesis of spatialized (2D or 3D) sound for applications for blind users.
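A common way to approximate a spatialized 2D source over headphones is to combine an interaural time difference (ITD) with an interaural level difference. The minimal sketch below assumes Woodworth's spherical-head formula for the ITD, constant-power panning as a crude stand-in for level differences, and an average head radius of 8.75 cm. It is an illustration only, not the synthesis method used in this work, and it ignores elevation, distance and head-related transfer functions (HRTFs).

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 degrees C
HEAD_RADIUS = 0.0875    # m, assumed average adult head radius


def woodworth_itd(azimuth_rad: float) -> float:
    """Interaural time difference in seconds (Woodworth spherical-head formula).

    Valid for |azimuth_rad| <= pi/2; 0 is straight ahead, positive is to the right.
    """
    theta = abs(azimuth_rad)
    return (HEAD_RADIUS / SPEED_OF_SOUND) * (theta + math.sin(theta))


def spatialize(mono: list[float], azimuth_rad: float,
               sample_rate: int = 44100) -> tuple[list[float], list[float]]:
    """Place a mono signal at azimuth_rad in the horizontal plane; return (left, right)."""
    if abs(azimuth_rad) > math.pi / 2:
        raise ValueError("azimuth must lie in [-pi/2, pi/2]")
    # Constant-power panning: a crude stand-in for interaural level differences.
    pan = (azimuth_rad + math.pi / 2) / 2  # maps [-pi/2, pi/2] onto [0, pi/2]
    gain_left, gain_right = math.cos(pan), math.sin(pan)
    # The ear farther from the source hears the sound later, by the ITD rounded
    # to whole samples; the nearer channel is zero-padded to the same length.
    delay = round(woodworth_itd(azimuth_rad) * sample_rate)
    early = list(mono) + [0.0] * delay
    late = [0.0] * delay + list(mono)
    # Source on the right (positive azimuth): the left ear is the far ear.
    left_in, right_in = (late, early) if azimuth_rad > 0 else (early, late)
    left = [gain_left * s for s in left_in]
    right = [gain_right * s for s in right_in]
    return left, right
```

For example, `spatialize([1.0] + [0.0] * 99, math.radians(45))` places a click 45 degrees to the right: the right channel is louder and starts earlier than the left one.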

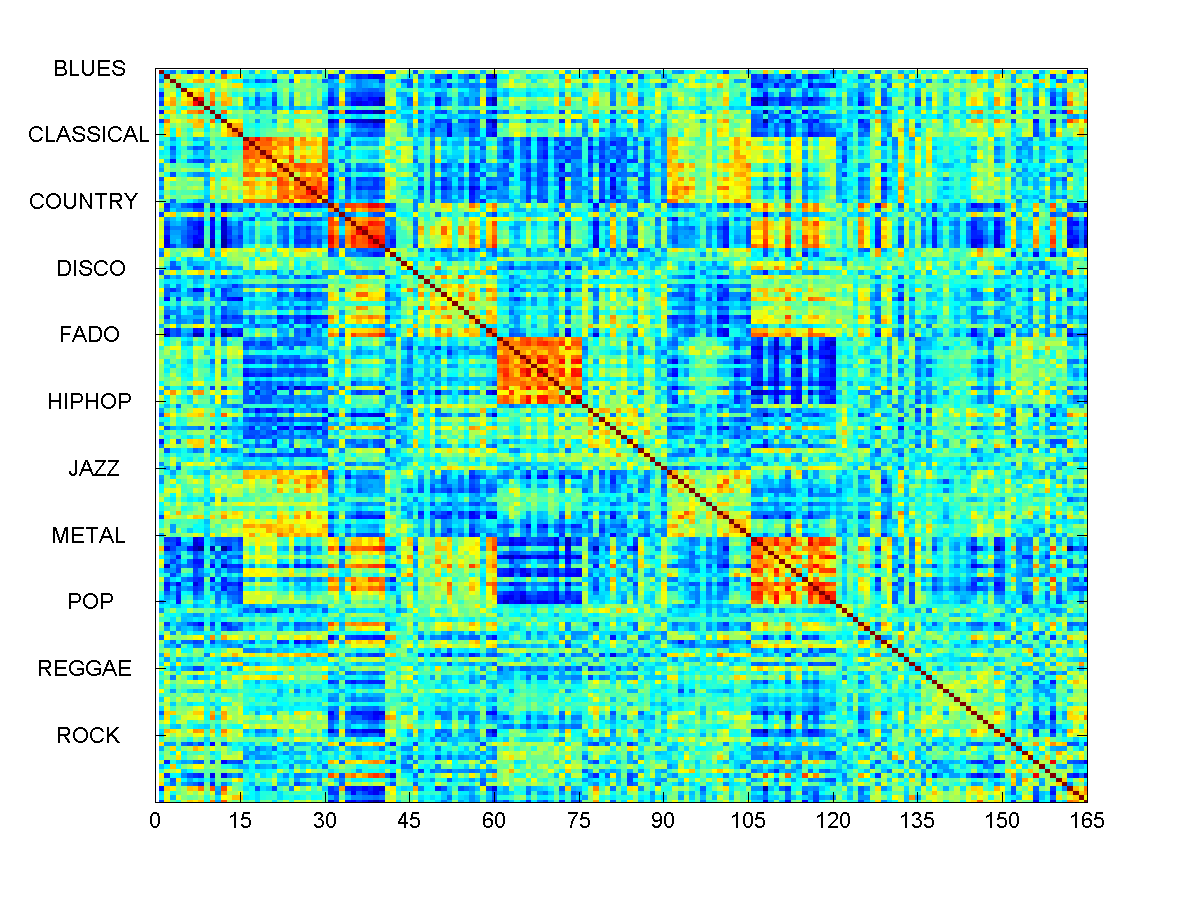

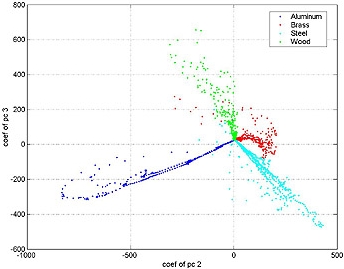

Most musical instrument classifiers focus on distinguishing different harmonic instruments, such as the violin and the flute, whose sounds have very different characteristics. Much less attention has been given to percussion instruments, especially to discriminating between instruments of the same type, such as the cymbals in a drum kit. We have been developing classifiers that can distinguish instruments of this latter type. In particular, we have been working with cymbal sounds, and we are interested in the modeling, classification, transcription and synthesis of these sounds. Some of the results appear in Cavaco and Almeida (IWSSIP 2012).
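To make the classification task concrete, here is a generic feature-plus-classifier pipeline. It is a sketch under stated assumptions, not the method of Cavaco and Almeida (IWSSIP 2012): the two features (spectral centroid and the fraction of energy in the second half of the sound) and the 1-nearest-neighbour rule are our own illustrative choices, and the synthetic decaying tones produced by the hypothetical helper `decaying_tone` stand in for real cymbal recordings.

```python
import cmath
import math


def decaying_tone(freq: float, tau_samples: float, sample_rate: float = 8000,
                  n: int = 256) -> list[float]:
    """Hypothetical test signal: an exponentially decaying sinusoid."""
    return [math.exp(-t / tau_samples) * math.sin(2 * math.pi * freq * t / sample_rate)
            for t in range(n)]


def spectral_centroid(signal: list[float], sample_rate: float) -> float:
    """Magnitude-weighted mean frequency in Hz, from a naive O(n^2) DFT."""
    n = len(signal)
    weighted, total = 0.0, 0.0
    for k in range(n // 2 + 1):  # non-negative frequency bins only
        mag = abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                      for t, x in enumerate(signal)))
        weighted += k * sample_rate / n * mag
        total += mag
    return weighted / total if total else 0.0


def late_energy_fraction(signal: list[float]) -> float:
    """Share of the energy in the second half of the signal (slow decays score higher)."""
    half = len(signal) // 2
    total = sum(x * x for x in signal)
    return sum(x * x for x in signal[half:]) / total if total else 0.0


def features(signal: list[float], sample_rate: float) -> tuple[float, float]:
    # Centroid in kHz so that both features have a comparable numeric range.
    return spectral_centroid(signal, sample_rate) / 1000.0, late_energy_fraction(signal)


def classify(query: tuple[float, float], training: list) -> str:
    """1-nearest-neighbour: label of the training feature vector closest to query."""
    return min(training, key=lambda item: math.dist(query, item[0]))[1]
```

Usage: build `training` as a list of `(features(signal, sr), label)` pairs, then call `classify(features(new_signal, sr), training)`. Real cymbal discrimination would likely need richer features and more training examples per class.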

Harmonic instruments

Apart from our work with percussion instruments, we have also been developing models that describe the sounds of harmonic instruments such as the flute, piano and guitar. Some of the results appear in Malheiro and Cavaco (INForum 2011).

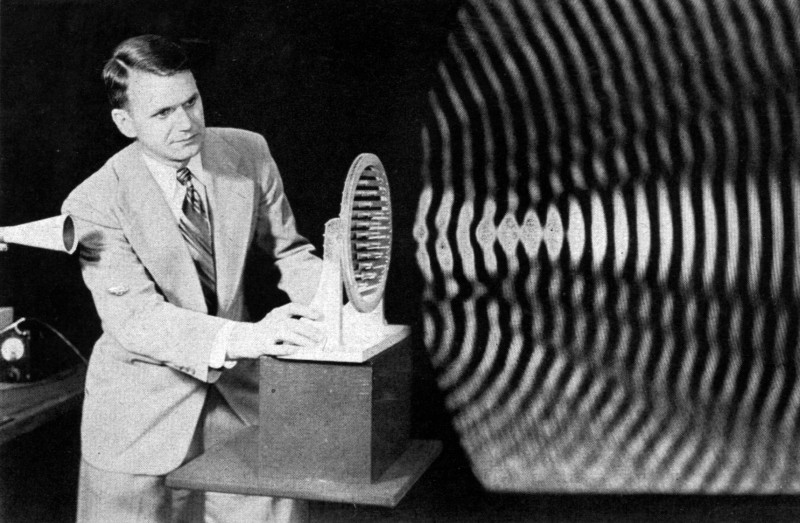

Natural sounds of the same type show rich variability in their acoustic structure. For example, different impacts on the same rod can generate very different acoustic waveforms. In natural environments, part of this variability is due to reverberation and background noise, but even when the sounds are recorded in anechoic conditions there is variability due to factors such as slight variations in impact force and location. For instance, the figure on the left shows that, even though different impacts on the same rod have very similar spectra, the relative power and duration of the partials vary from one instance to another; these differences cannot be explained by a simple change in overall amplitude. In spite of these variations, the sounds are often perceived as almost identical when heard, meaning that they share common intrinsic structures.
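Why a simple amplitude change cannot explain these differences can be checked with a toy model. Assume, for illustration only, that each impact is a sum of exponentially decaying sinusoids (partials), each described by a frequency, an amplitude and a decay time. A uniform gain g multiplies every partial's energy by g², so each partial's share of the total power is unchanged; only changes to individual partials' amplitudes or decay times alter that profile. The parameter values below are arbitrary and are not measurements from the rod recordings in the figure.

```python
import math


def partial_energies(partials, sample_rate=16000, duration=1.0):
    """Energy of each exponentially decaying partial, summed sample by sample.

    partials: list of (frequency_hz, amplitude, decay_time_s) tuples describing
    one hypothetical impact instance.
    """
    n = int(sample_rate * duration)
    energies = []
    for freq, amp, tau in partials:
        energy = 0.0
        for i in range(n):
            t = i / sample_rate
            sample = amp * math.exp(-t / tau) * math.sin(2 * math.pi * freq * t)
            energy += sample * sample
        energies.append(energy)
    return energies


def relative_power(partials):
    """Each partial's share of the total energy (the shares sum to 1)."""
    energies = partial_energies(partials)
    total = sum(energies)
    return [e / total for e in energies]


# Toy "impacts" on the same object: the partial frequencies never change.
impact_a = [(440.0, 1.0, 0.50), (1210.0, 0.6, 0.20), (2350.0, 0.3, 0.08)]
louder_a = [(f, 2.0 * a, tau) for f, a, tau in impact_a]  # same impact, 2x gain
impact_b = [(440.0, 1.0, 0.50), (1210.0, 0.6, 0.35), (2350.0, 0.3, 0.05)]
```

`relative_power(impact_a)` and `relative_power(louder_a)` agree to rounding error, whereas `relative_power(impact_b)`, whose second and third partials decay at different rates, gives a clearly different power profile even though its spectral peaks sit at the same frequencies.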

We are developing data-driven methods for learning the intrinsic features that govern the acoustic structure of impact sounds. These methods require no a priori knowledge of the physics, dynamics or acoustics involved, and they are used to create models of impact sounds that capture a rich variety of structure and variability. For more details, see Cavaco and Lewicki (JASA 2007).
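As a rough illustration of what "learning features from data, without physical priors" can mean, the sketch below uses principal component analysis (PCA) to find the dominant direction of variability across many instances of a sound. PCA is a swapped-in, deliberately simple linear technique and is not the method of Cavaco and Lewicki (JASA 2007); the function `principal_component` and the three-number-per-instance synthetic data are hypothetical.

```python
import math
import random


def principal_component(rows: list[list[float]], iterations: int = 200) -> list[float]:
    """Unit-length first principal component of rows, found by power iteration."""
    n, dim = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(dim)]
    centred = [[r[j] - mean[j] for j in range(dim)] for r in rows]
    # Sample covariance matrix (dim x dim).
    cov = [[sum(c[i] * c[j] for c in centred) / (n - 1) for j in range(dim)]
           for i in range(dim)]
    vec = [1.0 + i for i in range(dim)]  # arbitrary non-zero starting vector
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(x * x for x in nxt))
        if norm == 0.0:
            raise ValueError("data has no variance")
        vec = [x / norm for x in nxt]
    return vec


# Hypothetical data: 200 instances, each described by 3 standardized numbers
# (e.g. per-partial decay times), varying mostly along one hidden direction.
rng = random.Random(0)
hidden = [2 / 3, -2 / 3, 1 / 3]  # unit vector
rows = []
for _ in range(200):
    s = rng.gauss(0.0, 1.0)
    rows.append([s * h + 0.01 * rng.gauss(0.0, 1.0) for h in hidden])
pc = principal_component(rows)
```

Here `pc` recovers `hidden` up to sign, without the code knowing anything about how the data were generated. Unlike PCA, methods aimed at intrinsic sound structure typically need to capture non-Gaussian, possibly non-orthogonal factors, which is why more expressive models are used in practice.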

In the past, we have also worked on sound recognition for robots; more specifically, on recognizing the sounds of toys for Kismet, a robot from the MIT AI Lab.